HPC - High Performance Computing

A collection of common HPC (High Performance Computing) benchmarks.

To see how your system performs on this suite, use the Phoronix Test Suite: it is as easy as running the phoronix-test-suite benchmark hpc command.
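For scripted runs, the command above can be wrapped in a small launcher. The following is a minimal sketch, not part of the Phoronix Test Suite itself: the helper names `build_command` and `run_suite` are hypothetical, and the only things taken from the text are the `phoronix-test-suite` binary name, the `benchmark` subcommand, and the `hpc` suite name. It assumes the binary is on `PATH`.

```python
import shlex
import shutil
import subprocess

PTS_BINARY = "phoronix-test-suite"  # command name as given in the text above
SUITE = "hpc"                        # suite name as given in the text above


def build_command(suite: str = SUITE) -> list[str]:
    """Return the argument list for benchmarking the given suite (hypothetical helper)."""
    return [PTS_BINARY, "benchmark", suite]


def run_suite(suite: str = SUITE) -> int:
    """Run the suite if the binary is found on PATH and return its exit code."""
    if shutil.which(PTS_BINARY) is None:
        raise FileNotFoundError(f"{PTS_BINARY} not found on PATH")
    # The benchmark may prompt for input and, for a suite this large,
    # may take a long time to finish.
    return subprocess.run(build_command(suite)).returncode


if __name__ == "__main__":
    # Only print the command here; call run_suite() explicitly to start a run.
    print(shlex.join(build_command()))
```

Building the argument list separately from running it makes it possible to log or inspect the exact command before committing to a long benchmark run.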

Tests In This Suite

ACES DGEMM

AI Benchmark Alpha

Algebraic Multi-Grid Benchmark

ArrayFire

- Test: BLAS CPU

ASKAP

- Test: tConvolve OpenCL

- Test: tConvolve CUDA

- Test: tConvolve MPI

- Test: tConvolve OpenMP

- Test: tConvolve MT

- Test: Hogbom Clean OpenMP

Caffe

- Model: AlexNet - Acceleration: CPU - Iterations: 100

- Model: AlexNet - Acceleration: CPU - Iterations: 200

- Model: AlexNet - Acceleration: CPU - Iterations: 1000

- Model: GoogleNet - Acceleration: CPU - Iterations: 100

- Model: GoogleNet - Acceleration: CPU - Iterations: 200

- Model: GoogleNet - Acceleration: CPU - Iterations: 1000

CloverLeaf

- Input: clover_bm

- Input: clover_bm64_short

- Input: clover_bm16

CP2K Molecular Dynamics

- Input: Fayalite-FIST

- Input: H20-64

- Input: H20-256

Darmstadt Automotive Parallel Heterogeneous Suite

- Backend: OpenMP - Kernel: Euclidean Cluster

- Backend: OpenMP - Kernel: NDT Mapping

- Backend: OpenMP - Kernel: Points2Image

- Backend: OpenCL - Kernel: Euclidean Cluster

- Backend: OpenCL - Kernel: NDT Mapping

- Backend: OpenCL - Kernel: Points2Image

- Backend: NVIDIA CUDA - Kernel: Euclidean Cluster

- Backend: NVIDIA CUDA - Kernel: NDT Mapping

- Backend: NVIDIA CUDA - Kernel: Points2Image

DeepSpeech

- Acceleration: CPU

Dolfyn

easyWave

- Input: e2Asean Grid + BengkuluSept2007 Source - Time: 240

- Input: e2Asean Grid + BengkuluSept2007 Source - Time: 1200

- Input: e2Asean Grid + BengkuluSept2007 Source - Time: 2400

ECP-CANDLE

- Benchmark: P1B2

- Benchmark: P3B1

- Benchmark: P3B2

Epoch

- Epoch3D Deck: Cone

Faiss

- Test: demo_sift1M

- Test: bench_polysemous_sift1m

FFTE

- Test: N=256, 1D Complex FFT Routine

FFTW

- Build: Stock - Size: 1D FFT Size 32

- Build: Stock - Size: 1D FFT Size 4096

- Build: Stock - Size: 2D FFT Size 32

- Build: Stock - Size: 2D FFT Size 4096

- Build: Float + SSE - Size: 1D FFT Size 32

- Build: Float + SSE - Size: 1D FFT Size 4096

- Build: Float + SSE - Size: 2D FFT Size 32

- Build: Float + SSE - Size: 2D FFT Size 4096

GNU Octave Benchmark

GPAW

- Input: Carbon Nanotube

Graph500

- Scale: 26

- Scale: 29

GROMACS

- Implementation: MPI CPU - Input: water_GMX50_bare

- Implementation: NVIDIA CUDA GPU - Input: water_GMX50_bare

HeFFTe - Highly Efficient FFT for Exascale

- Test: r2c - Backend: Stock - Precision: float - X Y Z: 128

- Test: r2c - Backend: Stock - Precision: float - X Y Z: 256

- Test: r2c - Backend: Stock - Precision: float - X Y Z: 512

- Test: r2c - Backend: Stock - Precision: float - X Y Z: 1024

- Test: r2c - Backend: Stock - Precision: float-long - X Y Z: 128

- Test: r2c - Backend: Stock - Precision: float-long - X Y Z: 256

- Test: r2c - Backend: Stock - Precision: float-long - X Y Z: 512

- Test: r2c - Backend: Stock - Precision: float-long - X Y Z: 1024

- Test: r2c - Backend: Stock - Precision: double - X Y Z: 128

- Test: r2c - Backend: Stock - Precision: double - X Y Z: 256

- Test: r2c - Backend: Stock - Precision: double - X Y Z: 512

- Test: r2c - Backend: Stock - Precision: double - X Y Z: 1024

- Test: r2c - Backend: Stock - Precision: double-long - X Y Z: 128

- Test: r2c - Backend: Stock - Precision: double-long - X Y Z: 256

- Test: r2c - Backend: Stock - Precision: double-long - X Y Z: 512

- Test: r2c - Backend: Stock - Precision: double-long - X Y Z: 1024

- Test: r2c - Backend: FFTW - Precision: float - X Y Z: 128

- Test: r2c - Backend: FFTW - Precision: float - X Y Z: 256

- Test: r2c - Backend: FFTW - Precision: float - X Y Z: 512

- Test: r2c - Backend: FFTW - Precision: float - X Y Z: 1024

- Test: r2c - Backend: FFTW - Precision: float-long - X Y Z: 128

- Test: r2c - Backend: FFTW - Precision: float-long - X Y Z: 256

- Test: r2c - Backend: FFTW - Precision: float-long - X Y Z: 512

- Test: r2c - Backend: FFTW - Precision: float-long - X Y Z: 1024

- Test: r2c - Backend: FFTW - Precision: double - X Y Z: 128

- Test: r2c - Backend: FFTW - Precision: double - X Y Z: 256

- Test: r2c - Backend: FFTW - Precision: double - X Y Z: 512

- Test: r2c - Backend: FFTW - Precision: double - X Y Z: 1024

- Test: r2c - Backend: FFTW - Precision: double-long - X Y Z: 128

- Test: r2c - Backend: FFTW - Precision: double-long - X Y Z: 256

- Test: r2c - Backend: FFTW - Precision: double-long - X Y Z: 512

- Test: r2c - Backend: FFTW - Precision: double-long - X Y Z: 1024

- Test: c2c - Backend: Stock - Precision: float - X Y Z: 128

- Test: c2c - Backend: Stock - Precision: float - X Y Z: 256

- Test: c2c - Backend: Stock - Precision: float - X Y Z: 512

- Test: c2c - Backend: Stock - Precision: float - X Y Z: 1024

- Test: c2c - Backend: Stock - Precision: float-long - X Y Z: 128

- Test: c2c - Backend: Stock - Precision: float-long - X Y Z: 256

- Test: c2c - Backend: Stock - Precision: float-long - X Y Z: 512

- Test: c2c - Backend: Stock - Precision: float-long - X Y Z: 1024

- Test: c2c - Backend: Stock - Precision: double - X Y Z: 128

- Test: c2c - Backend: Stock - Precision: double - X Y Z: 256

- Test: c2c - Backend: Stock - Precision: double - X Y Z: 512

- Test: c2c - Backend: Stock - Precision: double - X Y Z: 1024

- Test: c2c - Backend: Stock - Precision: double-long - X Y Z: 128

- Test: c2c - Backend: Stock - Precision: double-long - X Y Z: 256

- Test: c2c - Backend: Stock - Precision: double-long - X Y Z: 512

- Test: c2c - Backend: Stock - Precision: double-long - X Y Z: 1024

- Test: c2c - Backend: FFTW - Precision: float - X Y Z: 128

- Test: c2c - Backend: FFTW - Precision: float - X Y Z: 256

- Test: c2c - Backend: FFTW - Precision: float - X Y Z: 512

- Test: c2c - Backend: FFTW - Precision: float - X Y Z: 1024

- Test: c2c - Backend: FFTW - Precision: float-long - X Y Z: 128

- Test: c2c - Backend: FFTW - Precision: float-long - X Y Z: 256

- Test: c2c - Backend: FFTW - Precision: float-long - X Y Z: 512

- Test: c2c - Backend: FFTW - Precision: float-long - X Y Z: 1024

- Test: c2c - Backend: FFTW - Precision: double - X Y Z: 128

- Test: c2c - Backend: FFTW - Precision: double - X Y Z: 256

- Test: c2c - Backend: FFTW - Precision: double - X Y Z: 512

- Test: c2c - Backend: FFTW - Precision: double - X Y Z: 1024

- Test: c2c - Backend: FFTW - Precision: double-long - X Y Z: 128

- Test: c2c - Backend: FFTW - Precision: double-long - X Y Z: 256

- Test: c2c - Backend: FFTW - Precision: double-long - X Y Z: 512

- Test: c2c - Backend: FFTW - Precision: double-long - X Y Z: 1024

High Performance Conjugate Gradient

- X Y Z: 104 104 104 - RT: 60

- X Y Z: 104 104 104 - RT: 1800

- X Y Z: 144 144 144 - RT: 60

- X Y Z: 144 144 144 - RT: 1800

- X Y Z: 160 160 160 - RT: 60

- X Y Z: 160 160 160 - RT: 1800

- X Y Z: 192 192 192 - RT: 60

- X Y Z: 192 192 192 - RT: 1800

Himeno Benchmark

HPC Challenge

- Test / Class: G-HPL

- Test / Class: G-Ptrans

- Test / Class: G-Random Access

- Test / Class: G-Ffte

- Test / Class: EP-STREAM Triad

- Test / Class: EP-DGEMM

- Test / Class: Random Ring Latency

- Test / Class: Random Ring Bandwidth

- Test / Class: Max Ping Pong Bandwidth

HPL Linpack

Intel MPI Benchmarks

- Test: IMB-MPI1 PingPong

- Test: IMB-MPI1 Sendrecv

- Test: IMB-MPI1 Exchange

- Test: IMB-P2P PingPong

Kripke

Laghos

- Test: Sedov Blast Wave, ube_922_hex.mesh

- Test: Triple Point Problem

LAMMPS Molecular Dynamics Simulator

- Model: Rhodopsin Protein

- Model: 20k Atoms

LeelaChessZero

- Backend: BLAS

libxsmm

- M N K: 32

- M N K: 64

- M N K: 128

- M N K: 256

LiteRT

- Model: Mobilenet Float

- Model: Mobilenet Quant

- Model: NASNet Mobile

- Model: SqueezeNet

- Model: Inception ResNet V2

- Model: Inception V4

- Model: Quantized COCO SSD MobileNet v1

- Model: DeepLab V3

Llama.cpp

- Model: llama-2-7b.Q4_0.gguf

- Model: llama-2-13b.Q4_0.gguf

- Model: llama-2-70b-chat.Q5_0.gguf

Llamafile

- Test: mistral-7b-instruct-v0.2.Q8_0 - Acceleration: CPU

- Test: llava-v1.5-7b-q4 - Acceleration: CPU

- Test: wizardcoder-python-34b-v1.0.Q6_K - Acceleration: CPU

LULESH

miniBUDE

- Implementation: OpenMP - Input Deck: BM1

- Implementation: OpenMP - Input Deck: BM2

miniFE

- Problem Size: Small

- Problem Size: Medium

- Problem Size: Large

Mlpack Benchmark

- Benchmark: scikit_svm

- Benchmark: scikit_linearridgeregression

- Benchmark: scikit_qda

- Benchmark: scikit_ica

Mobile Neural Network

Monte Carlo Simulations of Ionised Nebulae

- Input: Dust 2D tau100.0

- Input: Gas HII40

NAMD

- Input: ATPase with 327,506 Atoms

- Input: STMV with 1,066,628 Atoms

NAS Parallel Benchmarks

- Test / Class: BT.C

- Test / Class: EP.C

- Test / Class: EP.D

- Test / Class: FT.C

- Test / Class: LU.C

- Test / Class: SP.B

- Test / Class: SP.C

- Test / Class: IS.D

- Test / Class: MG.C

- Test / Class: CG.C

NCNN

- Target: CPU

- Target: Vulkan GPU

Nebular Empirical Analysis Tool

nekRS

- Input: TurboPipe Periodic

- Input: Kershaw

Neural Magic DeepSparse

- Model: NLP Text Classification, BERT base uncased SST2, Sparse INT8 - Scenario: Synchronous Single-Stream

- Model: NLP Text Classification, BERT base uncased SST2, Sparse INT8 - Scenario: Asynchronous Multi-Stream

- Model: NLP Text Classification, DistilBERT mnli - Scenario: Synchronous Single-Stream

- Model: NLP Text Classification, DistilBERT mnli - Scenario: Asynchronous Multi-Stream

- Model: CV Classification, ResNet-50 ImageNet - Scenario: Synchronous Single-Stream

- Model: CV Classification, ResNet-50 ImageNet - Scenario: Asynchronous Multi-Stream

- Model: NLP Token Classification, BERT base uncased conll2003 - Scenario: Synchronous Single-Stream

- Model: NLP Token Classification, BERT base uncased conll2003 - Scenario: Asynchronous Multi-Stream

- Model: CV Detection, YOLOv5s COCO, Sparse INT8 - Scenario: Synchronous Single-Stream

- Model: CV Detection, YOLOv5s COCO, Sparse INT8 - Scenario: Asynchronous Multi-Stream

- Model: NLP Document Classification, oBERT base uncased on IMDB - Scenario: Synchronous Single-Stream

- Model: NLP Document Classification, oBERT base uncased on IMDB - Scenario: Asynchronous Multi-Stream

- Model: CV Segmentation, 90% Pruned YOLACT Pruned - Scenario: Synchronous Single-Stream

- Model: CV Segmentation, 90% Pruned YOLACT Pruned - Scenario: Asynchronous Multi-Stream

- Model: ResNet-50, Baseline - Scenario: Synchronous Single-Stream

- Model: ResNet-50, Baseline - Scenario: Asynchronous Multi-Stream

- Model: ResNet-50, Sparse INT8 - Scenario: Synchronous Single-Stream

- Model: ResNet-50, Sparse INT8 - Scenario: Asynchronous Multi-Stream

- Model: BERT-Large, NLP Question Answering, Sparse INT8 - Scenario: Synchronous Single-Stream

- Model: BERT-Large, NLP Question Answering, Sparse INT8 - Scenario: Asynchronous Multi-Stream

- Model: Llama2 Chat 7b Quantized - Scenario: Synchronous Single-Stream

- Model: Llama2 Chat 7b Quantized - Scenario: Asynchronous Multi-Stream

Numenta Anomaly Benchmark

- Detector: Bayesian Changepoint

- Detector: Windowed Gaussian

- Detector: Relative Entropy

- Detector: Earthgecko Skyline

- Detector: KNN CAD

- Detector: Contextual Anomaly Detector OSE

Numpy Benchmark

NWChem

- Input: C240 Buckyball

oneDNN

- Harness: Convolution Batch Shapes Auto - Engine: CPU

- Harness: Deconvolution Batch shapes_1d - Engine: CPU

- Harness: Deconvolution Batch shapes_3d - Engine: CPU

- Harness: IP Shapes 1D - Engine: CPU

- Harness: IP Shapes 3D - Engine: CPU

- Harness: Recurrent Neural Network Training - Engine: CPU

- Harness: Recurrent Neural Network Inference - Engine: CPU

ONNX Runtime

- Model: yolov4 - Device: CPU - Executor: Standard

- Model: yolov4 - Device: CPU - Executor: Parallel

- Model: fcn-resnet101-11 - Device: CPU - Executor: Standard

- Model: fcn-resnet101-11 - Device: CPU - Executor: Parallel

- Model: super-resolution-10 - Device: CPU - Executor: Standard

- Model: super-resolution-10 - Device: CPU - Executor: Parallel

- Model: bertsquad-12 - Device: CPU - Executor: Standard

- Model: bertsquad-12 - Device: CPU - Executor: Parallel

- Model: GPT-2 - Device: CPU - Executor: Standard

- Model: GPT-2 - Device: CPU - Executor: Parallel

- Model: ArcFace ResNet-100 - Device: CPU - Executor: Standard

- Model: ArcFace ResNet-100 - Device: CPU - Executor: Parallel

- Model: ResNet50 v1-12-int8 - Device: CPU - Executor: Standard

- Model: ResNet50 v1-12-int8 - Device: CPU - Executor: Parallel

- Model: CaffeNet 12-int8 - Device: CPU - Executor: Standard

- Model: CaffeNet 12-int8 - Device: CPU - Executor: Parallel

- Model: Faster R-CNN R-50-FPN-int8 - Device: CPU - Executor: Standard

- Model: Faster R-CNN R-50-FPN-int8 - Device: CPU - Executor: Parallel

- Model: T5 Encoder - Device: CPU - Executor: Standard

- Model: T5 Encoder - Device: CPU - Executor: Parallel

- Model: ZFNet-512 - Device: CPU - Executor: Standard

- Model: ZFNet-512 - Device: CPU - Executor: Parallel

- Model: ResNet101_DUC_HDC-12 - Device: CPU - Executor: Standard

- Model: ResNet101_DUC_HDC-12 - Device: CPU - Executor: Parallel

OpenCV

- Test: DNN - Deep Neural Network

OpenFOAM

- Input: motorBike

- Input: drivaerFastback, Small Mesh Size

- Input: drivaerFastback, Medium Mesh Size

- Input: drivaerFastback, Large Mesh Size

OpenRadioss

- Model: Bird Strike on Windshield

- Model: Rubber O-Ring Seal Installation

- Model: Cell Phone Drop Test

- Model: Bumper Beam

- Model: INIVOL and Fluid Structure Interaction Drop Container

- Model: Chrysler Neon 1M

- Model: Ford Taurus 10M

OpenVINO

- Model: Face Detection FP16 - Device: CPU

- Model: Face Detection FP16-INT8 - Device: CPU

- Model: Age Gender Recognition Retail 0013 FP16 - Device: CPU

- Model: Age Gender Recognition Retail 0013 FP16-INT8 - Device: CPU

- Model: Person Detection FP16 - Device: CPU

- Model: Person Detection FP32 - Device: CPU

- Model: Weld Porosity Detection FP16-INT8 - Device: CPU

- Model: Weld Porosity Detection FP16 - Device: CPU

- Model: Vehicle Detection FP16-INT8 - Device: CPU

- Model: Vehicle Detection FP16 - Device: CPU

- Model: Person Vehicle Bike Detection FP16 - Device: CPU

- Model: Machine Translation EN To DE FP16 - Device: CPU

- Model: Face Detection Retail FP16 - Device: CPU

- Model: Face Detection Retail FP16-INT8 - Device: CPU

- Model: Handwritten English Recognition FP16 - Device: CPU

- Model: Handwritten English Recognition FP16-INT8 - Device: CPU

- Model: Road Segmentation ADAS FP16 - Device: CPU

- Model: Road Segmentation ADAS FP16-INT8 - Device: CPU

- Model: Person Re-Identification Retail FP16 - Device: CPU

- Model: Noise Suppression Poconet-Like FP16 - Device: CPU

OpenVINO GenAI

- Model: TinyLlama-1.1B-Chat-v1.0 - Device: CPU

- Model: Phi-3-mini-128k-instruct-int4-ov - Device: CPU

- Model: Falcon-7b-instruct-int4-ov - Device: CPU

- Model: Gemma-7b-int4-ov - Device: CPU

Palabos

- Grid Size: 100

- Grid Size: 400

- Grid Size: 500

- Grid Size: 1000

- Grid Size: 4000

Parboil

- Test: OpenMP CUTCP

- Test: OpenMP MRI-Q

- Test: OpenMP MRI Gridding

- Test: OpenMP Stencil

- Test: OpenMP LBM

Pennant

- Test: leblancbig

- Test: sedovbig

PETSc

- Test: Streams

PlaidML

- FP16: No - Mode: Inference - Network: ResNet 50 - Device: CPU

- FP16: No - Mode: Inference - Network: VGG16 - Device: CPU

PyHPC Benchmarks

- Device: CPU - Backend: Aesara - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: Aesara - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Aesara - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: Aesara - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Aesara - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: Aesara - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Aesara - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: Aesara - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Aesara - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: Aesara - Project Size: 4194304 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numpy - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: Numpy - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numpy - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: Numpy - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numpy - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: Numpy - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numpy - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: Numpy - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numpy - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: Numpy - Project Size: 4194304 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: JAX - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: JAX - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: JAX - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: JAX - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: JAX - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: JAX - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: JAX - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: JAX - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: JAX - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: JAX - Project Size: 4194304 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numba - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: Numba - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numba - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: Numba - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numba - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: Numba - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numba - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: Numba - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: Numba - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: Numba - Project Size: 4194304 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: PyTorch - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: PyTorch - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: PyTorch - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: PyTorch - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: PyTorch - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: PyTorch - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: PyTorch - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: PyTorch - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: PyTorch - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: PyTorch - Project Size: 4194304 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: TensorFlow - Project Size: 16384 - Benchmark: Equation of State

- Device: CPU - Backend: TensorFlow - Project Size: 16384 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: TensorFlow - Project Size: 65536 - Benchmark: Equation of State

- Device: CPU - Backend: TensorFlow - Project Size: 65536 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: TensorFlow - Project Size: 262144 - Benchmark: Equation of State

- Device: CPU - Backend: TensorFlow - Project Size: 262144 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: TensorFlow - Project Size: 1048576 - Benchmark: Equation of State

- Device: CPU - Backend: TensorFlow - Project Size: 1048576 - Benchmark: Isoneutral Mixing

- Device: CPU - Backend: TensorFlow - Project Size: 4194304 - Benchmark: Equation of State

- Device: CPU - Backend: TensorFlow - Project Size: 4194304 - Benchmark: Isoneutral Mixing

PyTorch

- Device: CPU - Batch Size: 1 - Model: ResNet-50

- Device: CPU - Batch Size: 1 - Model: ResNet-152

- Device: CPU - Batch Size: 1 - Model: Efficientnet_v2_l

- Device: CPU - Batch Size: 16 - Model: ResNet-50

- Device: CPU - Batch Size: 16 - Model: ResNet-152

- Device: CPU - Batch Size: 16 - Model: Efficientnet_v2_l

- Device: CPU - Batch Size: 32 - Model: ResNet-50

- Device: CPU - Batch Size: 32 - Model: ResNet-152

- Device: CPU - Batch Size: 32 - Model: Efficientnet_v2_l

- Device: CPU - Batch Size: 64 - Model: ResNet-50

- Device: CPU - Batch Size: 64 - Model: ResNet-152

- Device: CPU - Batch Size: 64 - Model: Efficientnet_v2_l

- Device: CPU - Batch Size: 256 - Model: ResNet-50

- Device: CPU - Batch Size: 256 - Model: ResNet-152

- Device: CPU - Batch Size: 256 - Model: Efficientnet_v2_l

- Device: CPU - Batch Size: 512 - Model: ResNet-50

- Device: CPU - Batch Size: 512 - Model: ResNet-152

- Device: CPU - Batch Size: 512 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 1 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 1 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 1 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 16 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 16 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 16 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 32 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 32 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 32 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 64 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 64 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 64 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 256 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 256 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 256 - Model: Efficientnet_v2_l

- Device: NVIDIA CUDA GPU - Batch Size: 512 - Model: ResNet-50

- Device: NVIDIA CUDA GPU - Batch Size: 512 - Model: ResNet-152

- Device: NVIDIA CUDA GPU - Batch Size: 512 - Model: Efficientnet_v2_l

QMCPACK

- Input: simple-H2O

- Input: Li2_STO_ae

- Input: FeCO6_b3lyp_gms

- Input: O_ae_pyscf_UHF

- Input: LiH_ae_MSD

- Input: H4_ae

Quantum ESPRESSO

- Input: AUSURF112

Quicksilver

- Input: CORAL2 P1

- Input: CORAL2 P2

- Input: CTS2

R Benchmark

RELION

- Test: Basic - Device: CPU

Remhos

- Test: Sample Remap Example

RNNoise

- Input: 26 Minute Long Talking Sample

Rodinia

- Test: OpenMP CFD Solver

- Test: OpenMP LavaMD

- Test: OpenMP Leukocyte

- Test: OpenMP Streamcluster

Scikit-Learn

- Benchmark: 20 Newsgroups / Logistic Regression

- Benchmark: Covertype Dataset Benchmark

- Benchmark: Feature Expansions

- Benchmark: GLM

- Benchmark: Glmnet

- Benchmark: Hist Gradient Boosting

- Benchmark: Hist Gradient Boosting Adult

- Benchmark: Hist Gradient Boosting Categorical Only

- Benchmark: Hist Gradient Boosting Higgs Boson

- Benchmark: Hist Gradient Boosting Threading

- Benchmark: Isolation Forest

- Benchmark: Isotonic / Perturbed Logarithm

- Benchmark: Isotonic / Logistic

- Benchmark: Isotonic / Pathological

- Benchmark: Kernel PCA Solvers / Time vs. N Components

- Benchmark: Kernel PCA Solvers / Time vs. N Samples

- Benchmark: Lasso

- Benchmark: LocalOutlierFactor

- Benchmark: SGDOneClassSVM

- Benchmark: Plot Fast KMeans

- Benchmark: Plot Hierarchical

- Benchmark: Plot Incremental PCA

- Benchmark: Plot Lasso Path

- Benchmark: Plot Neighbors

- Benchmark: Plot Non-Negative Matrix Factorization

- Benchmark: Plot OMP vs. LARS

- Benchmark: Plot Parallel Pairwise

- Benchmark: Plot Polynomial Kernel Approximation

- Benchmark: Plot Singular Value Decomposition

- Benchmark: Plot Ward

- Benchmark: Sparse Random Projections / 100 Iterations

- Benchmark: RCV1 Logreg Convergence

- Benchmark: SAGA

- Benchmark: Sample Without Replacement

- Benchmark: SGD Regression

- Benchmark: Sparsify

- Benchmark: Text Vectorizers

- Benchmark: Tree

- Benchmark: MNIST Dataset

- Benchmark: TSNE MNIST Dataset

SHOC Scalable HeterOgeneous Computing

- Target: OpenCL - Benchmark: Bus Speed Download

- Target: OpenCL - Benchmark: Bus Speed Readback

- Target: OpenCL - Benchmark: Max SP Flops

- Target: OpenCL - Benchmark: Texture Read Bandwidth

- Target: OpenCL - Benchmark: FFT SP

- Target: OpenCL - Benchmark: GEMM SGEMM_N

- Target: OpenCL - Benchmark: MD5 Hash

- Target: OpenCL - Benchmark: Reduction

- Target: OpenCL - Benchmark: Triad

- Target: OpenCL - Benchmark: S3D

spaCy

SPECFEM3D

- Model: Layered Halfspace

- Model: Water-layered Halfspace

- Model: Homogeneous Halfspace

- Model: Mount St. Helens

- Model: Tomographic Model

TensorFlow

- Device: CPU - Batch Size: 1 - Model: VGG-16

- Device: CPU - Batch Size: 1 - Model: ResNet-50

- Device: CPU - Batch Size: 1 - Model: AlexNet

- Device: CPU - Batch Size: 1 - Model: GoogLeNet

- Device: CPU - Batch Size: 16 - Model: VGG-16

- Device: CPU - Batch Size: 16 - Model: ResNet-50

- Device: CPU - Batch Size: 16 - Model: AlexNet

- Device: CPU - Batch Size: 16 - Model: GoogLeNet

- Device: CPU - Batch Size: 32 - Model: VGG-16

- Device: CPU - Batch Size: 32 - Model: ResNet-50

- Device: CPU - Batch Size: 32 - Model: AlexNet

- Device: CPU - Batch Size: 32 - Model: GoogLeNet

- Device: CPU - Batch Size: 64 - Model: VGG-16

- Device: CPU - Batch Size: 64 - Model: ResNet-50

- Device: CPU - Batch Size: 64 - Model: AlexNet

- Device: CPU - Batch Size: 64 - Model: GoogLeNet

- Device: CPU - Batch Size: 256 - Model: VGG-16

- Device: CPU - Batch Size: 256 - Model: ResNet-50

- Device: CPU - Batch Size: 256 - Model: AlexNet

- Device: CPU - Batch Size: 256 - Model: GoogLeNet

- Device: CPU - Batch Size: 512 - Model: VGG-16

- Device: CPU - Batch Size: 512 - Model: ResNet-50

- Device: CPU - Batch Size: 512 - Model: AlexNet

- Device: CPU - Batch Size: 512 - Model: GoogLeNet

- Device: GPU - Batch Size: 1 - Model: VGG-16

- Device: GPU - Batch Size: 1 - Model: ResNet-50

- Device: GPU - Batch Size: 1 - Model: AlexNet

- Device: GPU - Batch Size: 1 - Model: GoogLeNet

- Device: GPU - Batch Size: 16 - Model: VGG-16

- Device: GPU - Batch Size: 16 - Model: ResNet-50

- Device: GPU - Batch Size: 16 - Model: AlexNet

- Device: GPU - Batch Size: 16 - Model: GoogLeNet

- Device: GPU - Batch Size: 32 - Model: VGG-16

- Device: GPU - Batch Size: 32 - Model: ResNet-50

- Device: GPU - Batch Size: 32 - Model: AlexNet

- Device: GPU - Batch Size: 32 - Model: GoogLeNet

- Device: GPU - Batch Size: 64 - Model: VGG-16

- Device: GPU - Batch Size: 64 - Model: ResNet-50

- Device: GPU - Batch Size: 64 - Model: AlexNet

- Device: GPU - Batch Size: 64 - Model: GoogLeNet

- Device: GPU - Batch Size: 256 - Model: VGG-16

- Device: GPU - Batch Size: 256 - Model: ResNet-50

- Device: GPU - Batch Size: 256 - Model: AlexNet

- Device: GPU - Batch Size: 256 - Model: GoogLeNet

- Device: GPU - Batch Size: 512 - Model: VGG-16

- Device: GPU - Batch Size: 512 - Model: ResNet-50

- Device: GPU - Batch Size: 512 - Model: AlexNet

- Device: GPU - Batch Size: 512 - Model: GoogLeNet

TensorFlow Lite

- Model: Mobilenet Float

- Model: Mobilenet Quant

- Model: NASNet Mobile

- Model: SqueezeNet

- Model: Inception ResNet V2

- Model: Inception V4

Timed HMMer Search

Timed MAFFT Alignment

Timed MrBayes Analysis

TNN

- Target: CPU - Model: DenseNet

- Target: CPU - Model: MobileNet v2

- Target: CPU - Model: SqueezeNet v1.1

- Target: CPU - Model: SqueezeNet v2

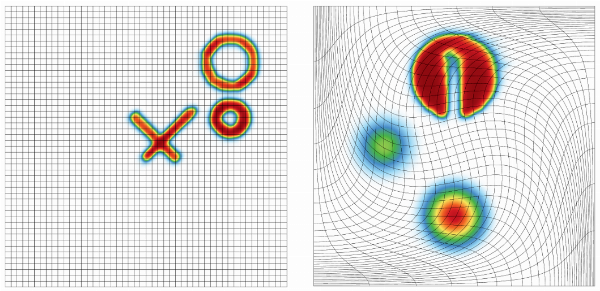

WarpX

- Input: Plasma Acceleration

- Input: Uniform Plasma

Whisper.cpp

- Model: ggml-base.en - Input: 2016 State of the Union

- Model: ggml-small.en - Input: 2016 State of the Union

- Model: ggml-medium.en - Input: 2016 State of the Union

Whisperfile

- Model Size: Tiny

- Model Size: Small

- Model Size: Medium

WRF

- Input: conus 2.5km

Xcompact3d Incompact3d

- Input: input.i3d 129 Cells Per Direction

- Input: input.i3d 193 Cells Per Direction

- Input: X3D-benchmarking input.i3d

XNNPACK

Revision History

pts/hpc-1.1.12 Sun, 24 Nov 2024 10:47:23 GMT

Add warpx and epoch to HPC test suite.

pts/hpc-1.1.11 Sat, 27 Jan 2024 19:48:56 GMT

Add QuickSilver to HPC suite.

pts/hpc-1.1.10 Sat, 14 Oct 2023 20:33:55 GMT

Add easywave to test suite.

pts/hpc-1.1.9 Sun, 06 Aug 2023 17:01:43 GMT

Add libxsmm, heffte, remhos, laghos, palabos, faiss, and petsc tests to HPC suite.

pts/hpc-1.1.8 Sat, 25 Mar 2023 18:15:31 GMT

Add specfem3d to HPC suite.

pts/hpc-1.1.7 Fri, 18 Nov 2022 14:55:03 GMT

Add nekrs and minibude to HPC test suite.

pts/hpc-1.1.6 Thu, 13 Oct 2022 16:47:18 GMT

Add OpenRadioss to HPC test suite.

pts/hpc-1.1.5 Fri, 28 Jan 2022 07:50:31 GMT

Add Graph500 to HPC suite.

pts/hpc-1.1.4 Fri, 22 Oct 2021 15:23:06 GMT

Add PyHPC to HPC test suite.

pts/hpc-1.1.3 Tue, 27 Apr 2021 18:01:43 GMT

Add WRF to HPC test suite.

pts/hpc-1.1.2 Tue, 09 Mar 2021 11:24:37 GMT

Add hpl to suite.

pts/hpc-1.1.1 Mon, 18 Jan 2021 21:03:32 GMT

Add IOR to HPC suite for disk / I/O performance.

pts/hpc-1.1.0 Thu, 14 Jan 2021 13:53:57 GMT

Add new tests.

pts/hpc-1.0.0 Wed, 08 Apr 2020 15:08:51 GMT

Initial commit of HPC high performance computing benchmarks suite for easy access.